Building Medical Domain Multimodal AI Model with Large Language Models

Aligning multimodal medical information with large language models to create a universal multimodal medical LLM assistant.

Used Skills: Neural Network Pipeline, NLP, Large Language Models, Multi-modality, Prompt Engineering, Fine-tuning

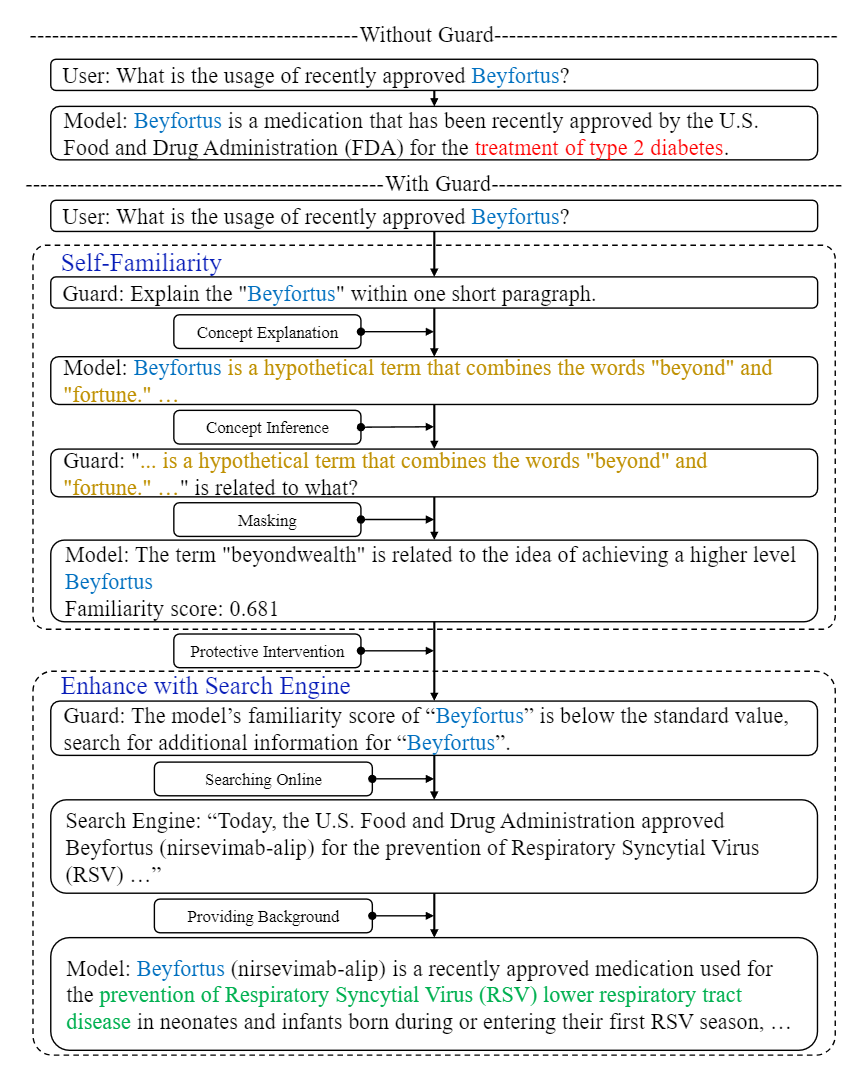

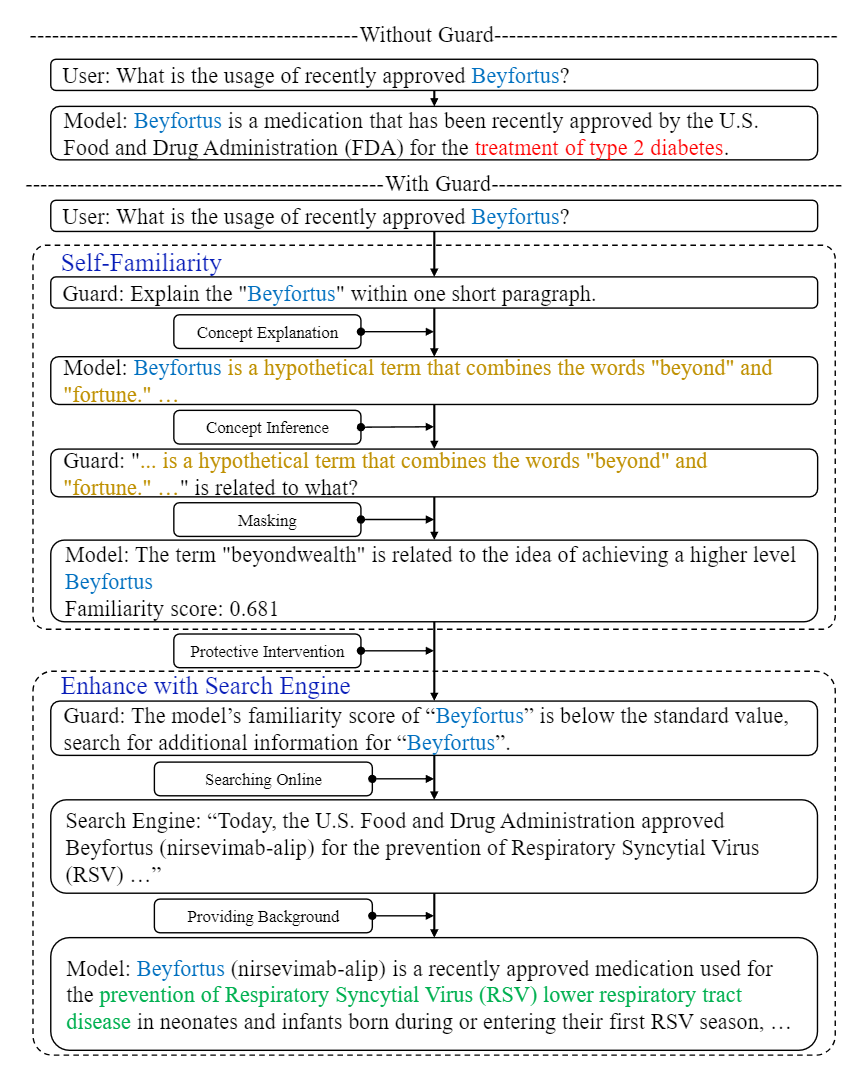

Evaluating Inner Knowledge of Large Language Models

Using prompt engineering to perform self-evaluation in a zero-resource setting, testing how well LLMs understand instructions.

Used Skills: Neural Network Pipeline, NLP, Large Language Models, Constrained Beam Search, Prompt Engineering

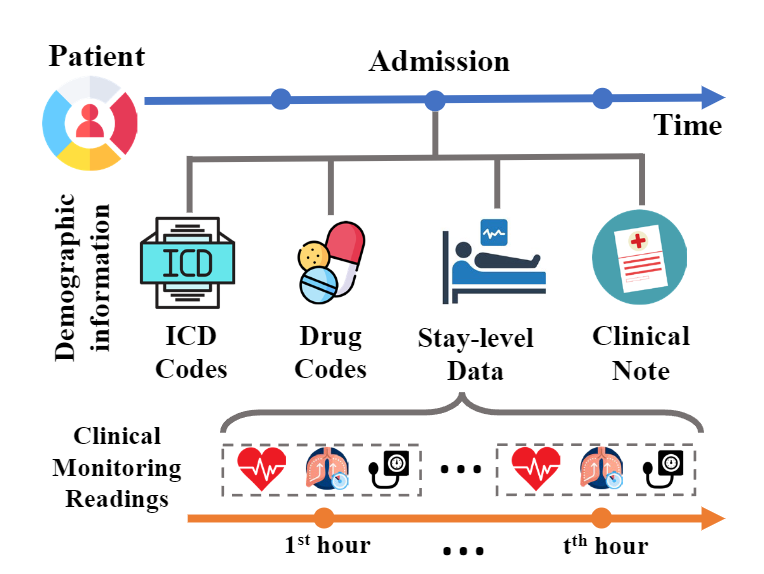

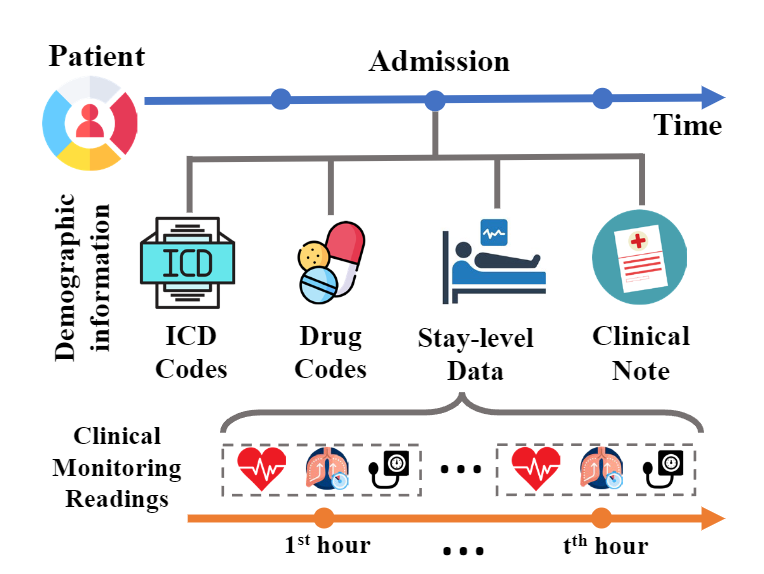

Multi-modality Pre-training of EHR Data

Developing MEDHMP, a novel, unified, multi-modal pre-training framework for health data.

Used Skills: Multi-modality, Pre-training, Pre-trained Language Model, Self-supervised Learning, Representation Learning, EHR, ICD Codes

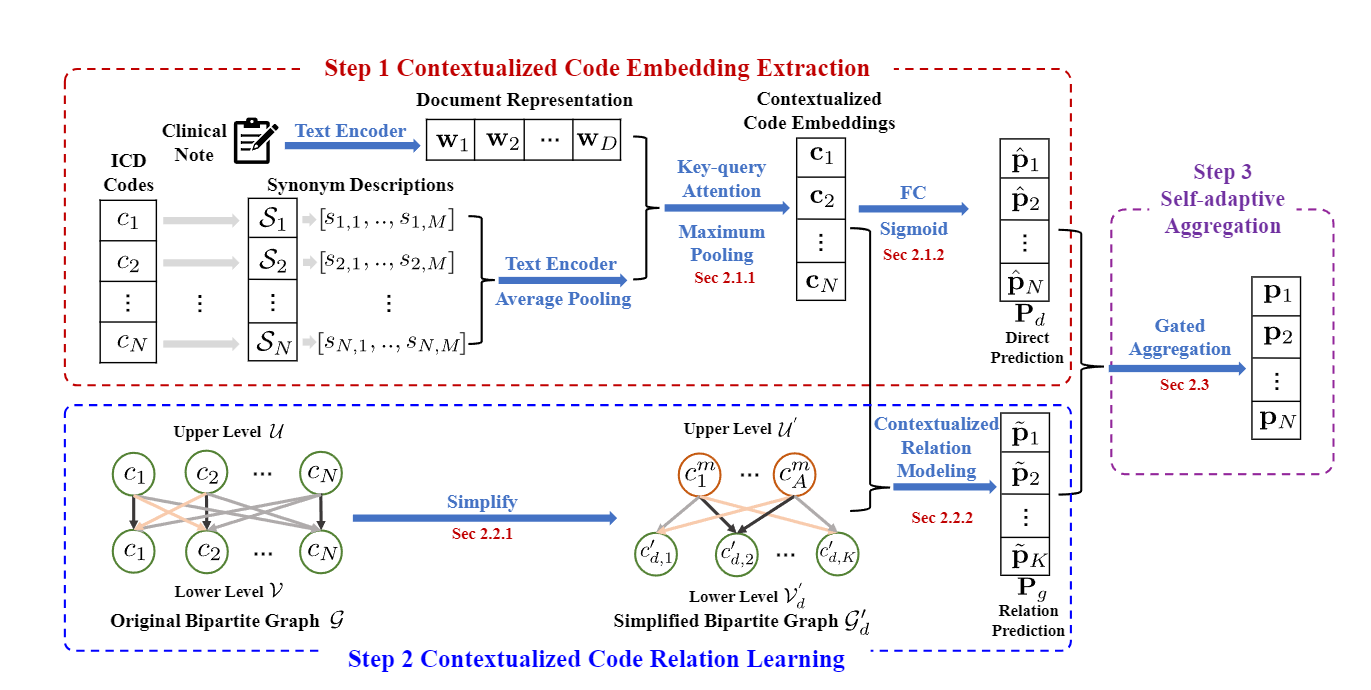

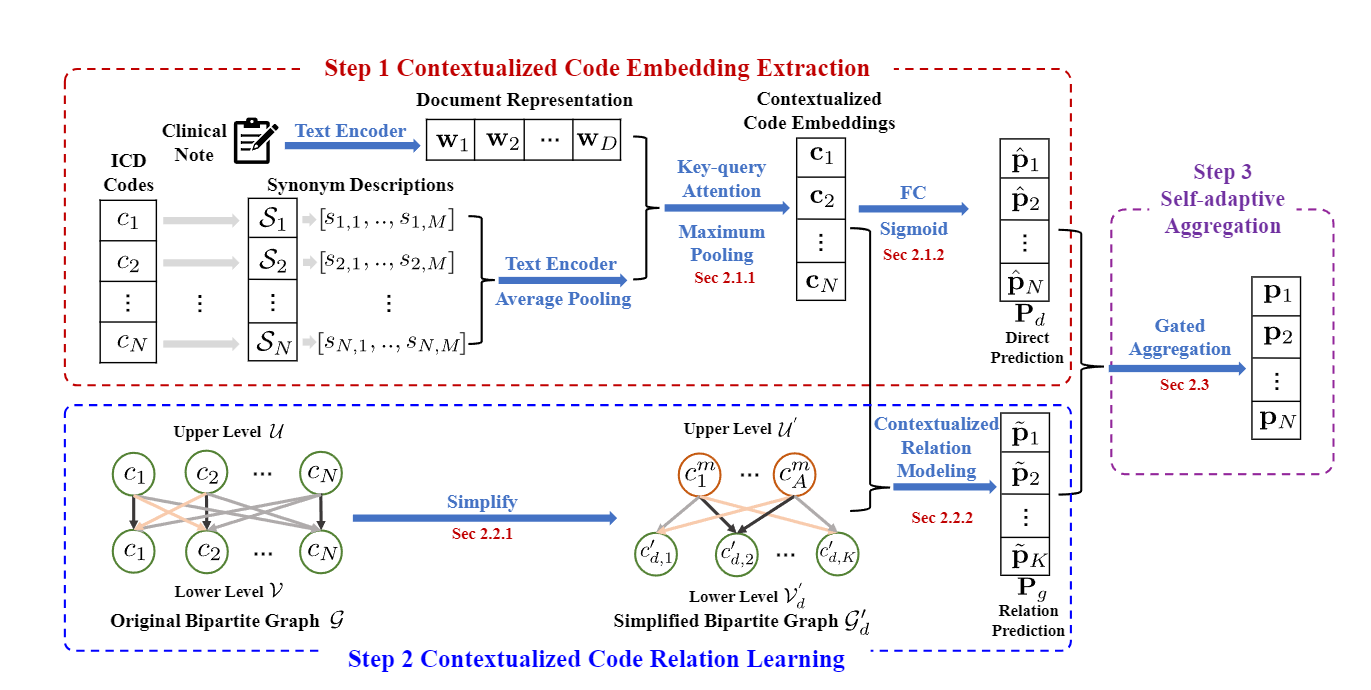

CoRelation: Boosting Automatic ICD Coding Through Contextualized Code Relation Learning

Improving ICD coding performance by modeling contextualized code relations with a graph network.

Used Skills: Bi-LSTM, Graph Attention Network, Synonym Fusion, ICD Coding

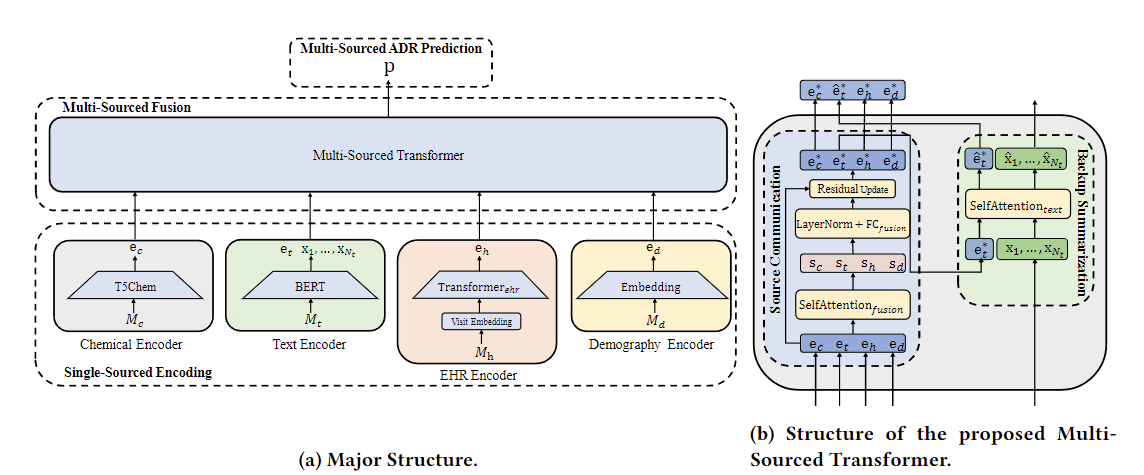

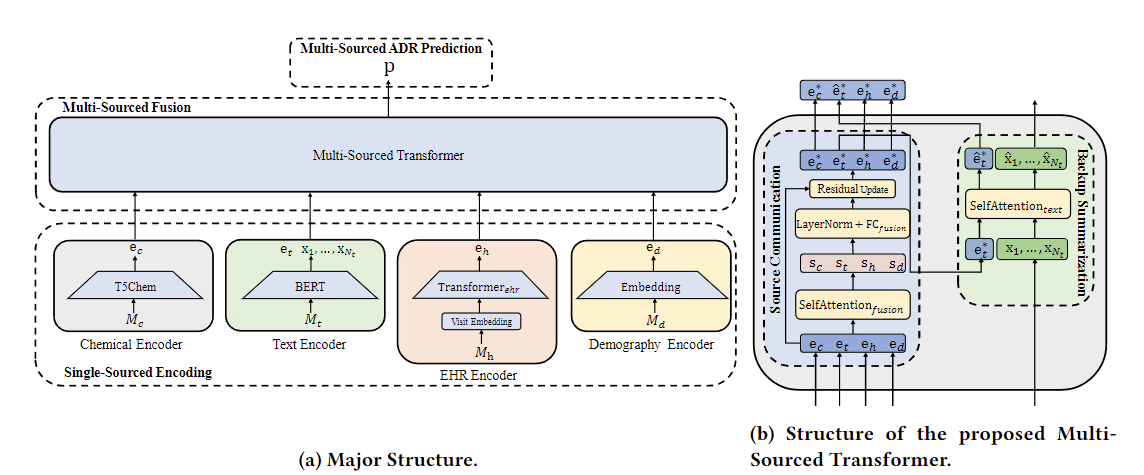

pADR: Personalized Adverse Drug Reaction Prediction

Combining a patient's EHR data with molecular-level drug information to predict potential adverse reactions.

Used Skills: Pre-trained Language Models, Transformers, Multi-modality, SMILES Chemical Representation, EHR, ICD Codes, Adverse Event Prediction

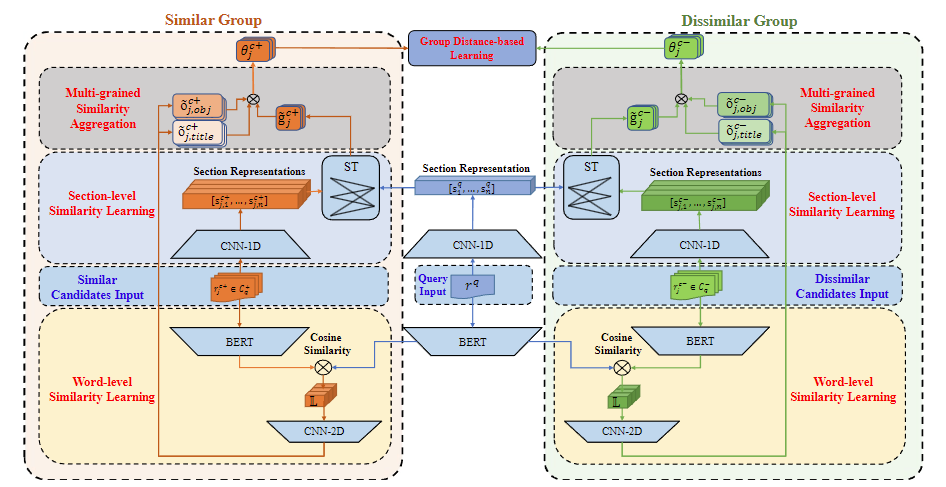

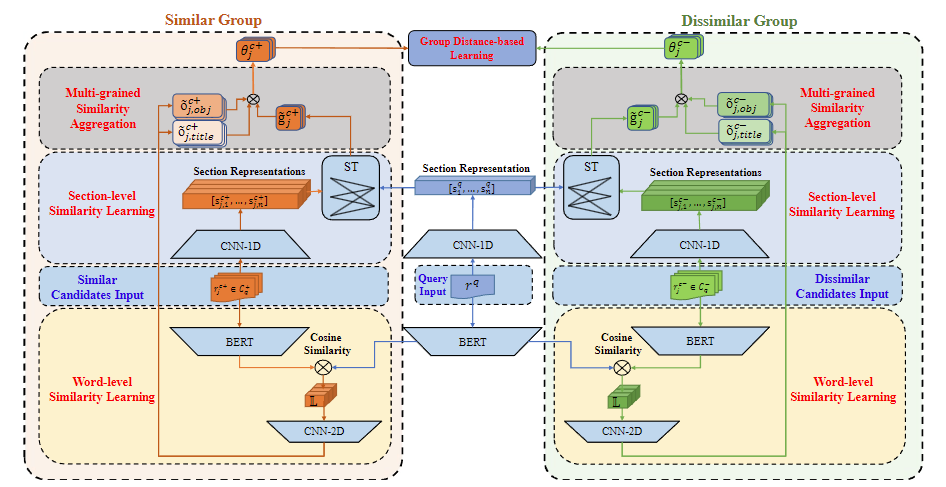

Clinical Trial Retrieval

Designing a hierarchical matching model for trial protocols with a novel group-based training loss and 2D word matching.

Used Skills: NLP, Transformers, Convolutional Network, Group Loss, Hierarchical Attention, Information Retrieval

Code not available.

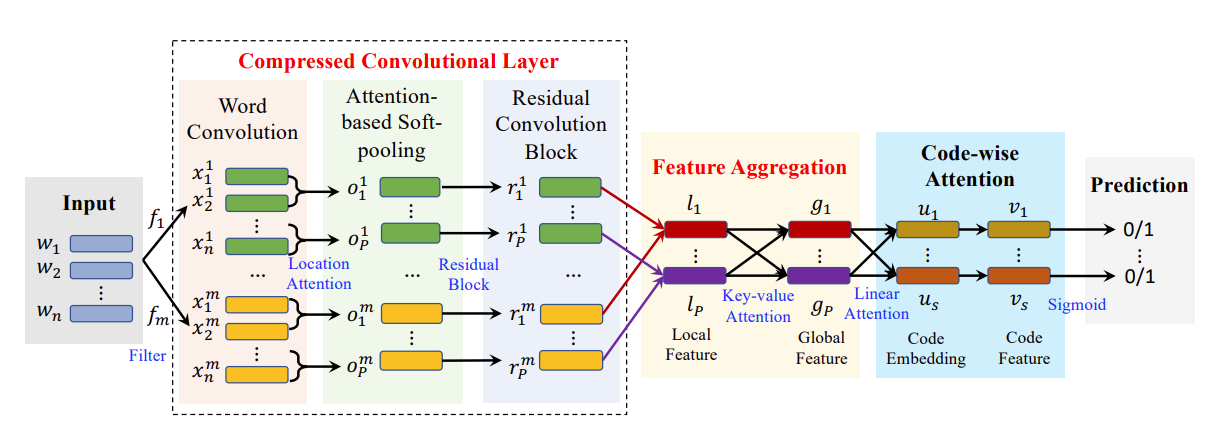

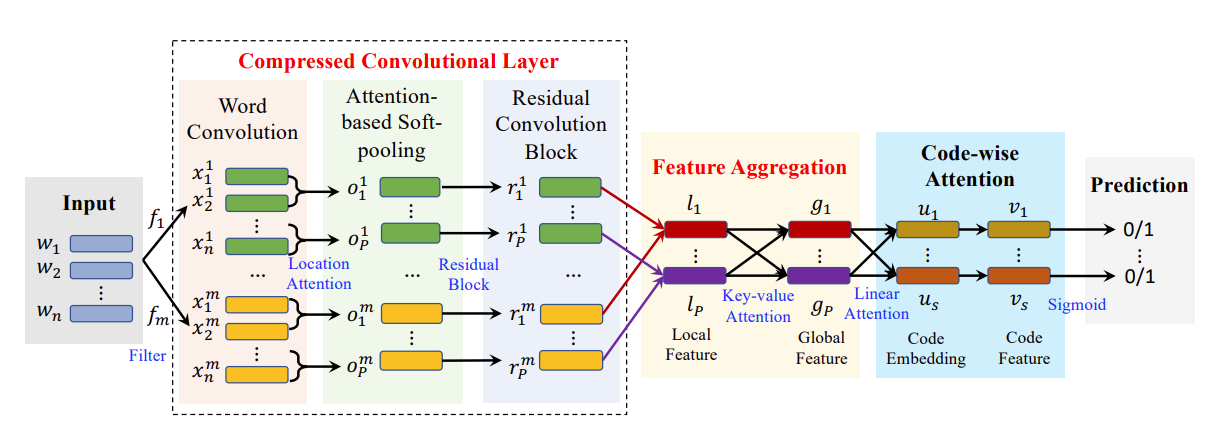

Fusion: Automated ICD Coding

Using information compression to reduce noise in clinical notes and speed up automatic ICD coding.

Used Skills: Transformers, NLP, ICD Coding

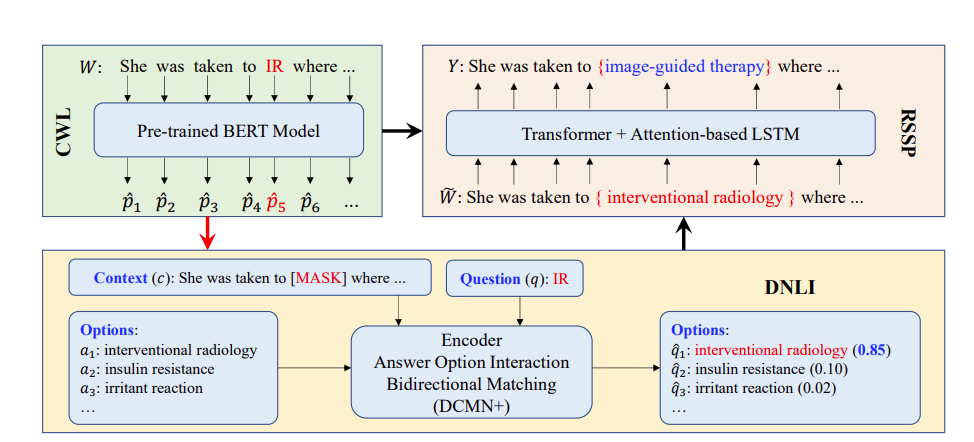

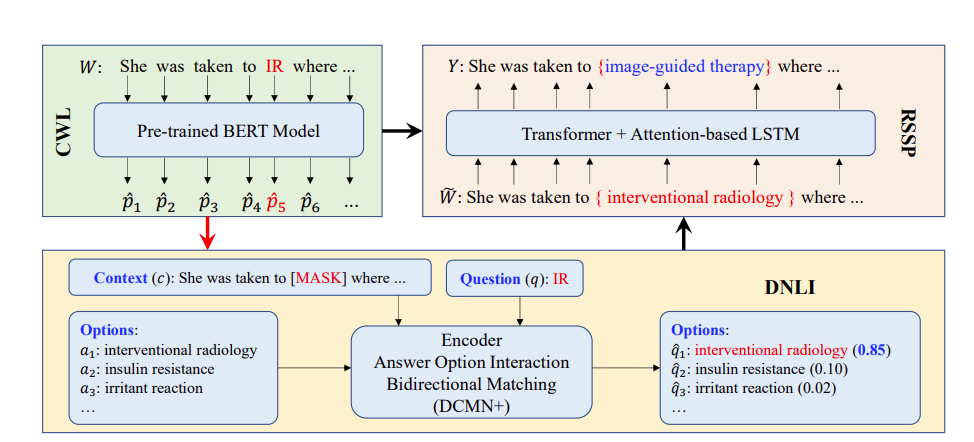

Benchmarking Automated Clinical Language Simplification

Designing a controllable medical term simplification pipeline that uses external medical dictionary knowledge.

Used Skills: Neural Network Pipeline, NLP, Question Answering, Constrained Generation, External Knowledge Injection

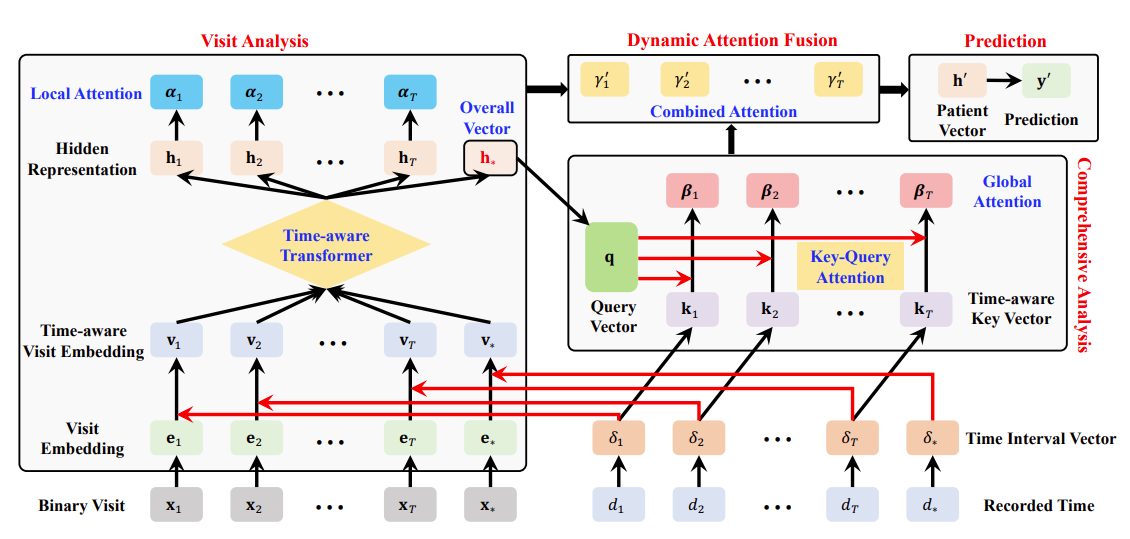

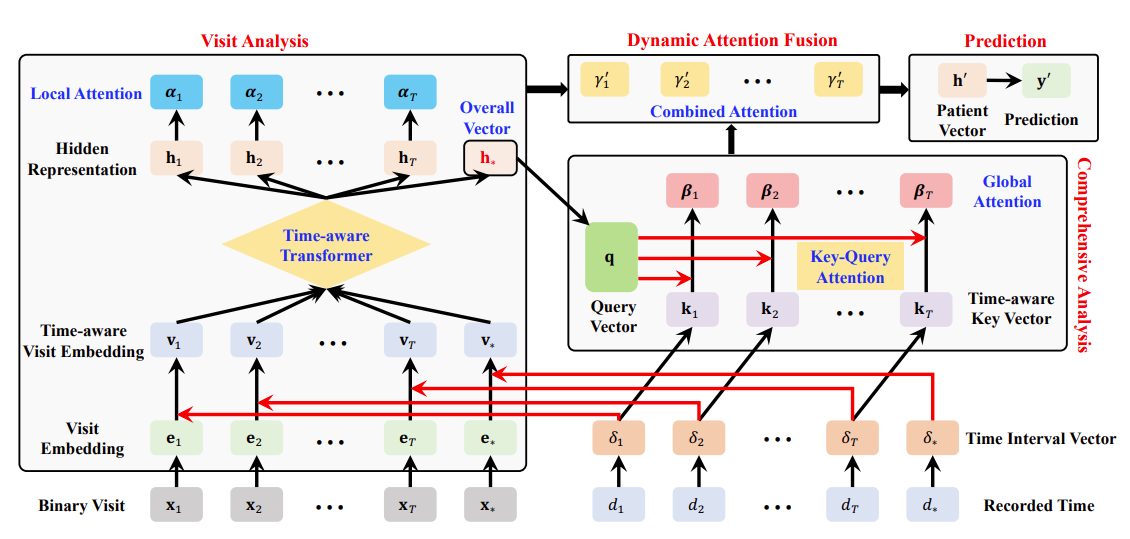

HiTANet

Using two-level transformers to model complex sequential EHR code data and predict future diseases.

Used Skills: Transformers, Time-aware Attention, EHR, ICD Codes, Disease Prediction

Structure Finding for Chart Design

This project uses automatic rule-based approaches to find potential structures in chart designs and extract them to generate templates for users.

Used Skills: Sequential Matching

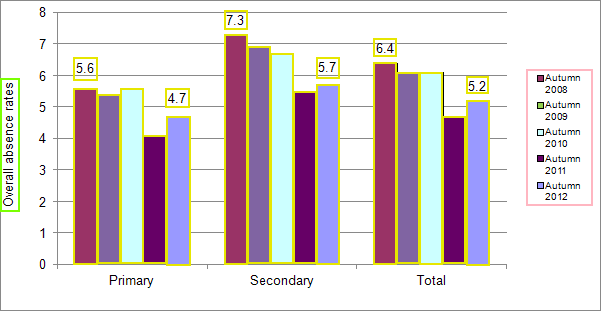

Object Detection for Chart Objects

Creating new approaches to detect chart objects. Unlike common objects in traditional object detection, chart objects are highly homogeneous and have unusual length-to-width ratios, which makes detection much harder. A new approach is therefore needed for this setting.

Used Skills: Computer Vision, Object Detection, Point Detection

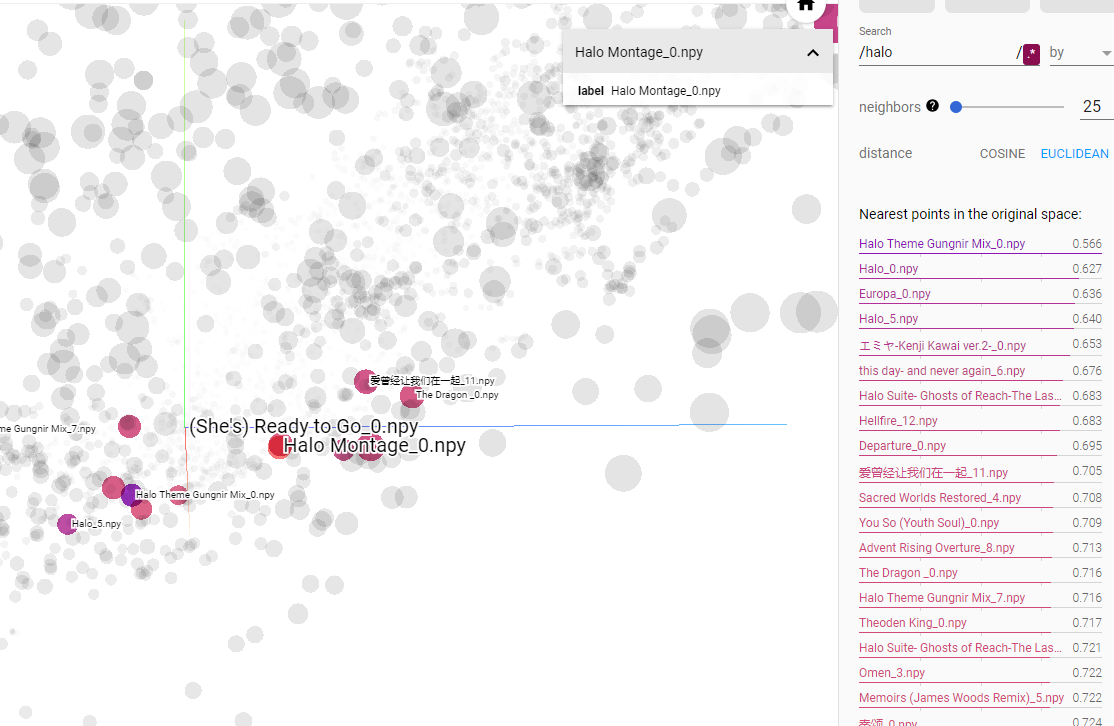

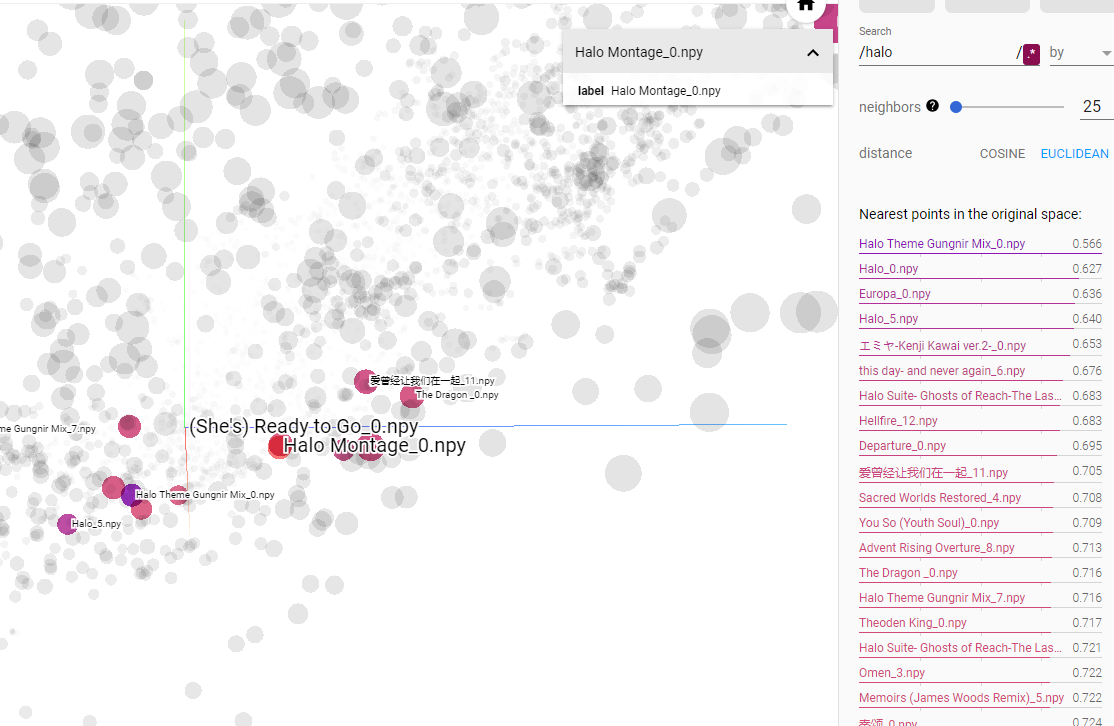

Pytorch Content Based Music Recommendation System

Inspired by general text embedding approaches and based on the idea of clustering.

Used Skills: Masked Pre-training, Fourier transform

Tensorflow pix2pix With Color Assign

An improved Pix2pix network that can assign the desired generated color based on a color mask. The original structure is divided into two parts: a texture network and a coloring network.

Used Skills: GAN, Computer Vision

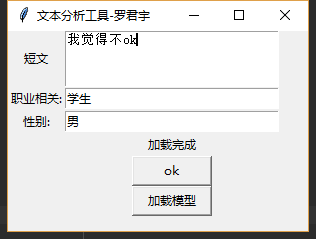

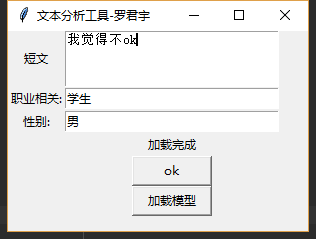

Chinese Twitter Author Info Analysis System

Using a web spider to collect short messages from a social network and training an ID classifier based on LSTM cells to automatically classify users’ social backgrounds according to their short messages.

Used Skills: Pre-trained Language Models, Text Classification, Web Spider

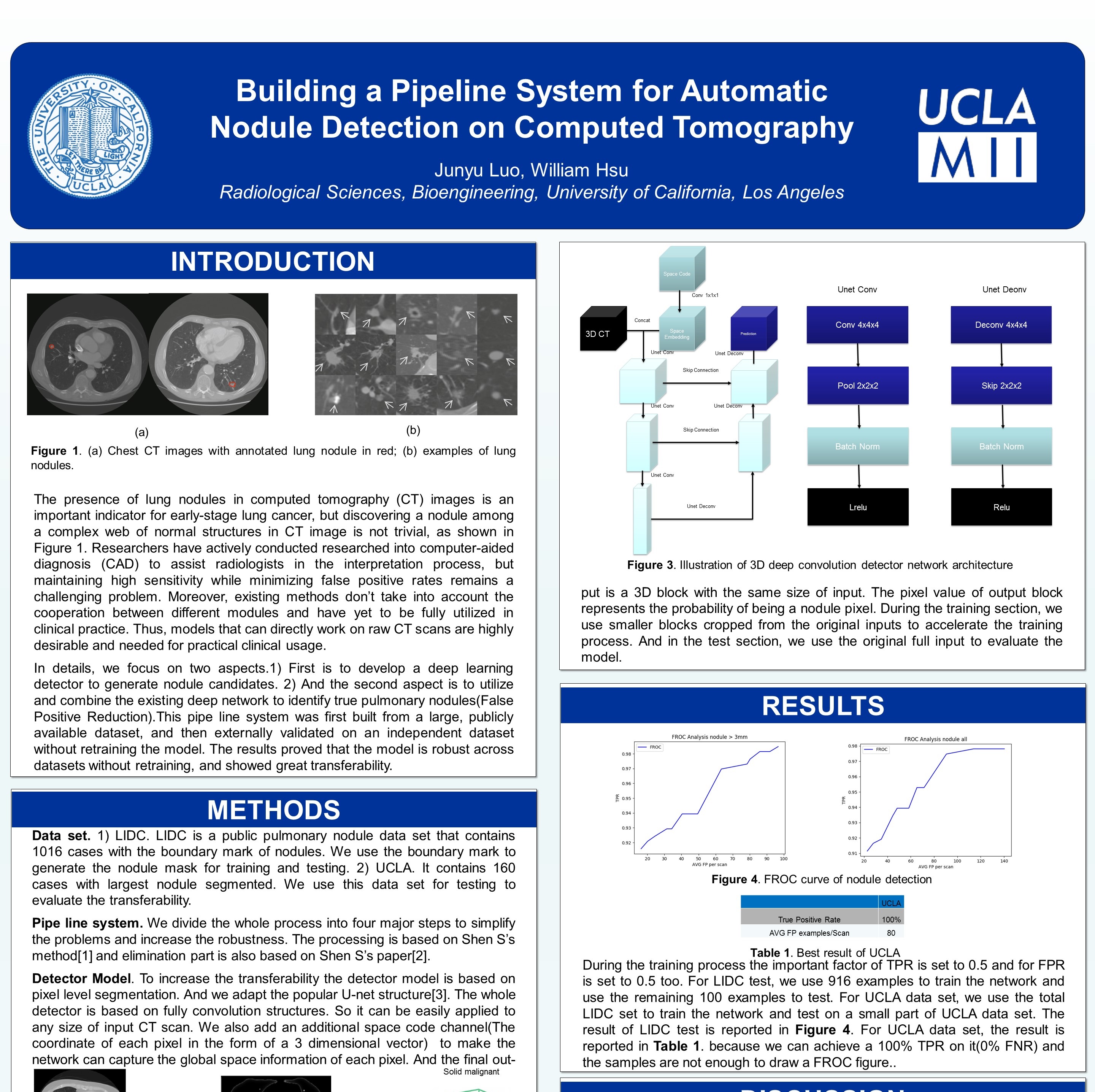

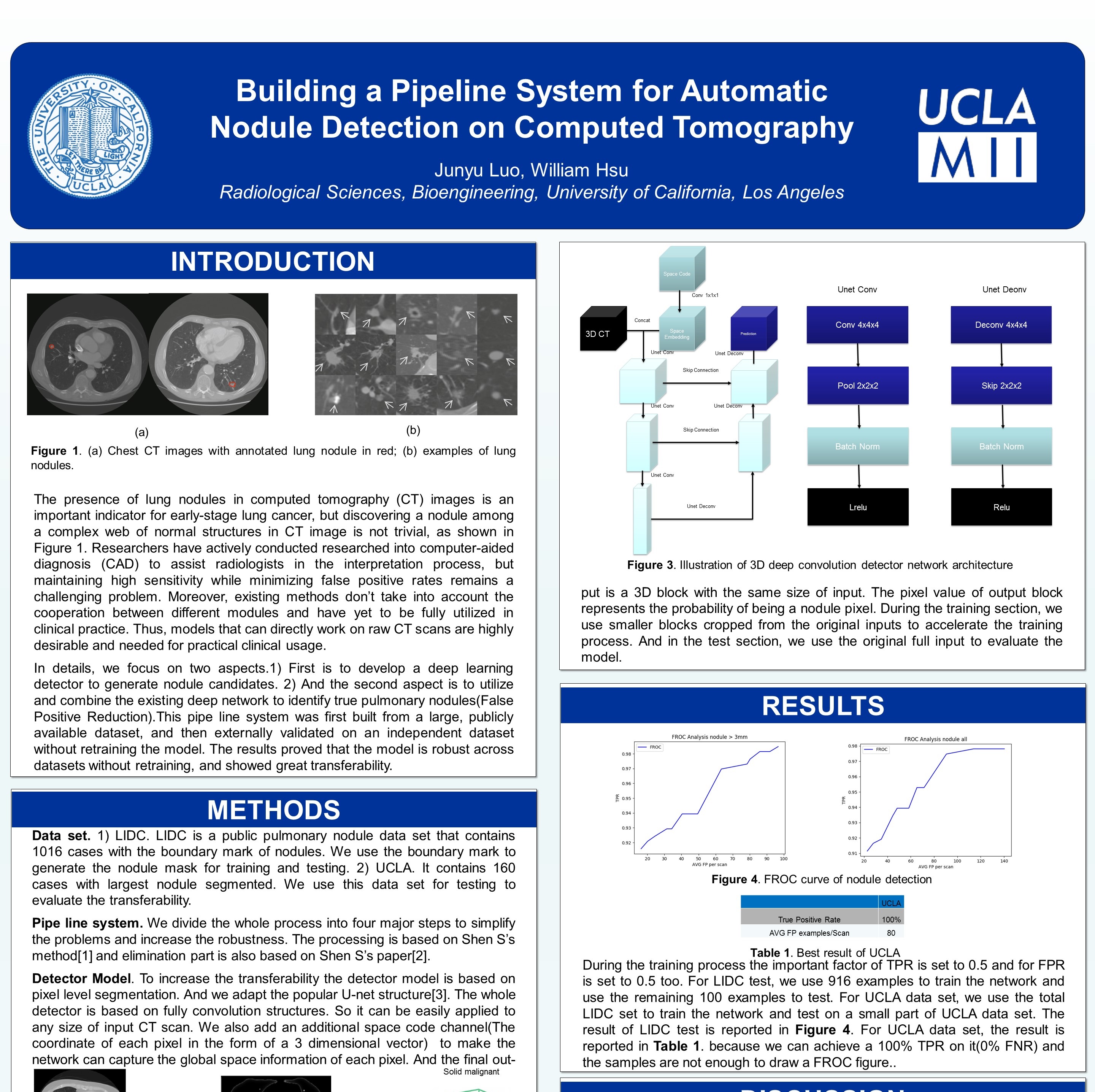

Pipeline System for Pulmonary Nodule Analysis

This project uses automatic approaches to help radiologists analyze pulmonary nodules based on CT images.

Used Skills: Object Detection, CT Images, Image Classification

Code not available.